Robotics & Coding

As a Gifted & Talented student, I have been finding little time to participate in the FIRST FTC robotics arena. I've been involved with FIRST Robotics since fourth grade. Today, I'm exploring a wider academic scope, including university research in astrophysics and neurophysiology.

Last year, I took the AP programming test, which was based on Java, this year's new FTC language. Even so, I'm finding Swift more enjoyable, more straightforward, and more forward-looking than Java. I still participate in FTC and mentor Java programming for several teams in southeast Wisconsin, which remains fun. The last FTC season was a blast, but in the near future I'm going to focus more on Xcode, specifically Swift. At the university level, astrophysical research demands fluency with Python, of course. All samples are below:

Coding Examples . . .

In academic research, one must test the legitimacy of one's questions, starting with baselines. At the University of Wisconsin-Milwaukee campus, I recently established such a baseline for our CCD camera, so that when it's attached to the telescope we know how to judge how faithfully it records true images for spectroscopy. Links: Measuring Linearity and the Python program.
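A linearity baseline like the one above generally comes down to checking that the camera's signal grows in proportion to exposure time. The sketch below is not the linked program; it is a minimal illustration under stated assumptions: flat-field frames have already been reduced to bias-subtracted mean counts (ADU), the shortest exposures are assumed to lie in the linear range and are used for the fit, and the sample numbers are hypothetical.

```python
"""Sketch: CCD linearity check by fitting mean counts against exposure time.

Assumptions (hypothetical, not from the original program): frames are already
reduced to bias-subtracted mean ADU values, and the first few exposures are
in the linear range, so they define the reference line.
"""


def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def percent_deviation(xs, ys, slope, intercept):
    """Percent departure of each measurement from the fitted line."""
    deviations = []
    for x, y in zip(xs, ys):
        predicted = slope * x + intercept
        deviations.append(100.0 * (y - predicted) / predicted)
    return deviations


if __name__ == "__main__":
    # Hypothetical exposure series (seconds) and mean counts (ADU);
    # the last point sags as the chip approaches saturation.
    exposures = [1.0, 2.0, 4.0, 8.0, 16.0]
    mean_adu = [1000.0, 2000.0, 4000.0, 8000.0, 15200.0]

    # Fit only the first three points, assumed to be safely linear.
    slope, intercept = linear_fit(exposures[:3], mean_adu[:3])
    for t, dev in zip(exposures, percent_deviation(exposures, mean_adu, slope, intercept)):
        print(f"{t:5.1f} s: {dev:+.2f} % from linear")
```

Fitting only the low-signal points is one common choice; fitting every point instead would let a saturating tail drag the reference line and hide the nonlinearity.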

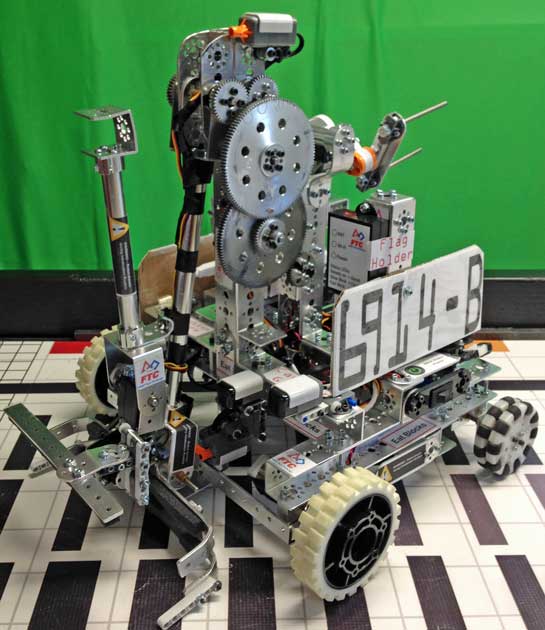

Zen42: a simple Tetrix bot used in the 2013 FTC Block Party competitions. The links below are code produced for educational purposes, shared here for anyone using RobotC. They are typical frames for a robot using the SMUX sensor multiplexer, with Gyro, IR, ultrasonic (US), and Light sensors on its ports, along with two stop switches (touch sensors) for the lifting arm and a gimbaled claw that uses one servo to close and hold blocks. The first link is designed for use directly over a Bluetooth connection; the second is the same code for the competition field, using the Field Control System (FCS) over Wi-Fi through the Samantha module.

Zen42 Teleop w/o FCS v112214 | Zen42 Teleop for FCS v112214

Here's the autonomous action link on YouTube, showing the results of sensor use.
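The two stop switches on the lifting arm typically gate the lift motor: a pressed switch blocks motion toward it but still lets the driver back away. The sketch below shows that logic in Python rather than the RobotC used on Zen42; the function name, the "positive power raises the arm" convention, and the -100 to 100 power range are assumptions for illustration, not the linked code.

```python
def lift_power(requested: int, upper_pressed: bool, lower_pressed: bool) -> int:
    """Return the power actually sent to the lift motor (hypothetical helper).

    Assumes positive power raises the arm and motor power is limited to
    the range -100..100. A pressed stop switch blocks further travel in its
    own direction only, so the arm can always be driven away from it.
    """
    if requested > 0 and upper_pressed:
        return 0  # already at the top: refuse to raise further
    if requested < 0 and lower_pressed:
        return 0  # already at the bottom: refuse to lower further
    # Clamp the driver's request to the assumed legal power range.
    return max(-100, min(100, requested))


if __name__ == "__main__":
    print(lift_power(60, upper_pressed=True, lower_pressed=False))   # blocked at top
    print(lift_power(-60, upper_pressed=True, lower_pressed=False))  # allowed: moving down
```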

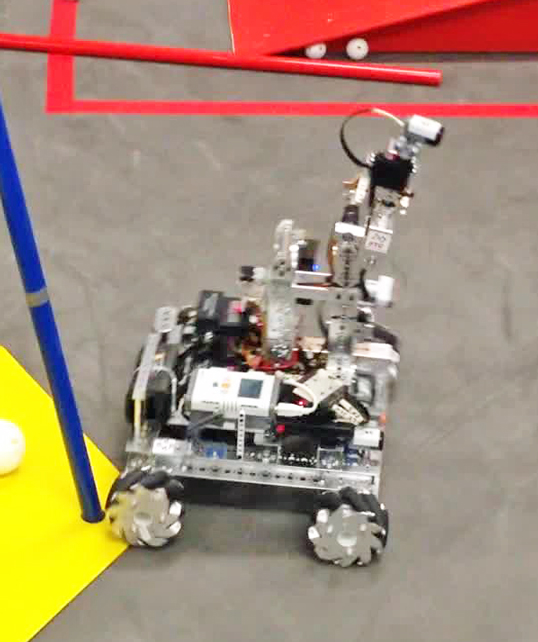

Zen42 decked out with Mecanum wheels for testing purposes, circa 12/2014. These wheels provide x and y directional control vectoring, with anything in between when the drive code is written correctly. In this YouTube video we demonstrate only the X and Y axes, with fixed speeds for lateral (Y) movements. Imagine what these wheel combinations will do to robotics when everyone starts using them! Zen42 Tele-Op only RobotC code (PDF). SuperDroidRobots provides a PDF link to mounting and directional vectors; we have a local PDF copy of the same.
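The "anything in between" comes from mixing forward, sideways, and rotation commands into four wheel powers. Below is a minimal Python sketch of the standard Mecanum mixing formula, not the Zen42 RobotC code; it assumes rollers mounted in the usual X pattern seen from above, joystick inputs in the range -1 to 1, and a wheel power range of -100 to 100. The signs depend on how the wheels and motors are actually mounted, so they may need flipping on a real robot.

```python
def mecanum_powers(forward, strafe, rotate, max_power=100):
    """Mix drive commands (each -1..1) into (front_left, front_right, rear_left, rear_right).

    Assumes rollers in the common X pattern; sign conventions vary with mounting.
    """
    fl = forward + strafe + rotate
    fr = forward - strafe - rotate
    rl = forward - strafe + rotate
    rr = forward + strafe - rotate
    # Scale down only if any wheel would exceed full power, preserving the ratios.
    largest = max(1.0, abs(fl), abs(fr), abs(rl), abs(rr))
    return tuple(round(max_power * p / largest) for p in (fl, fr, rl, rr))


if __name__ == "__main__":
    print(mecanum_powers(1, 0, 0))  # straight ahead
    print(mecanum_powers(0, 1, 0))  # pure sideways slide
    print(mecanum_powers(1, 1, 0))  # diagonal: only one wheel pair drives
```

The diagonal case shows the "in between" directions: only the front-left and rear-right wheels turn, and the roller forces combine into a 45-degree path.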

Here are more details of the above FTC sensor-only platform robot, which runs RobotC on an NXT brick. This year we use the directional Gyro, Ultrasonic, Infrared Seeker, and Light sensors, mounted for testing and framing code to later apply to any finished competition robot. Below is one of the longest routes from this year's FIRST FTC Cascade Effect challenge; the final bot would perform a mechanical function wherever you see pauses. View it on YouTube, with the autonomous program as a PDF copy or text copy. Finally, here's the Tele-op program in PDF and text copies.

Note that the initializeRobot and waitForStart statements are commented out; the comment markers need to be removed for Wi-Fi multi-robot competitions. These copies haven't been commented line by line yet, unfortunately, but will be updated soon. Driver files (load sequence) and subfolder locations are very important and are noted within the program. The drivers limit us to RobotC version 3.60, not the latest version.
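A directional gyro reports turn rate rather than heading, so autonomous routes usually integrate its readings over time to know when a turn is finished. This Python sketch illustrates that idea only; it is not taken from the linked RobotC programs, and it assumes that a raw reading minus the at-rest bias equals degrees per second, that the bias is measured by averaging readings while the robot sits still, and that the class and function names are hypothetical.

```python
class HeadingTracker:
    """Integrate gyro rate readings into a heading estimate in degrees (hypothetical helper)."""

    def __init__(self, bias: float):
        self.bias = bias      # raw reading when the robot is not rotating
        self.heading = 0.0    # accumulated heading since start, degrees

    def update(self, raw_rate: float, dt: float) -> float:
        # Remove the at-rest bias, then integrate rate (assumed deg/s) over dt seconds.
        self.heading += (raw_rate - self.bias) * dt
        return self.heading


def calibrate_bias(samples):
    """Average raw readings taken while the robot is stationary."""
    return sum(samples) / len(samples)


def turn_done(heading: float, target: float, tolerance: float = 2.0) -> bool:
    """True once the heading is within tolerance degrees of the target."""
    return abs(target - heading) <= tolerance


if __name__ == "__main__":
    tracker = HeadingTracker(calibrate_bias([600.0, 601.0, 599.0]))
    # Simulated readings: turning at 90 deg/s, sampled every 0.5 s.
    for reading in (690.0, 690.0):
        heading = tracker.update(reading, 0.5)
        print(heading, turn_done(heading, 90.0))
```

Because any leftover bias error is integrated along with the real rotation, heading drifts over long routes; recalibrating before each match and keeping turns short limits that drift.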

"Peace" while I work. "Be kind" during construction. "Return" for updates.

Research

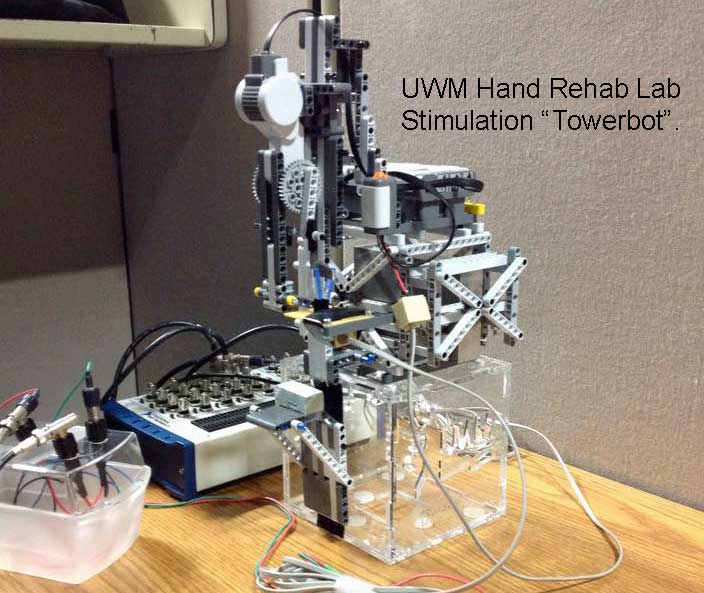

While I was in sixth grade (middle school), our robotics coach coordinated this community-university project, which allowed me to design, build, and modify the unit above for Dr. Na Jin Seo's research lab at UWM. The project ran on and off for two years until all the bugs were caught and removed; the "Towerbot" stimulator above is now used within the data-collection loop of an active biometric study. This was my first robotics assignment at the university research level.

Robot in Use

Above, a volunteer is in position to test the "Towerbot" stimulator while wearing a brain-wave data-collection sensor cap. I used an NXT2 brick, mainly Lego parts, and RobotC strictly for its operation. The unit had a mechanical parallel optic sensor circuit board that provided the data on stimulation strokes; all mechanical and brain data collection was managed by Kishor Lakshminarayanan, a UWM PhD student, on three other computers, mostly using variants of LabVIEW software products.
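An optic sensor that reports stimulation strokes is often read by counting beam interruptions. The Python sketch below is a hypothetical illustration, not the Towerbot's RobotC code or the lab's LabVIEW setup. It assumes the sensor yields a stream of numeric readings that rise when a stroke blocks the beam, and it uses two thresholds (hysteresis) so that noise near a single cutoff is not counted as extra strokes. The threshold values and sample readings are made up.

```python
def count_strokes(readings, high, low):
    """Count strokes as rising edges, using hysteresis between two thresholds.

    A stroke is counted when a reading reaches `high`; another stroke cannot be
    counted until the signal has fallen back to `low` or below.
    """
    count = 0
    blocked = False  # True while the beam is considered interrupted
    for value in readings:
        if not blocked and value >= high:
            blocked = True
            count += 1
        elif blocked and value <= low:
            blocked = False
    return count


if __name__ == "__main__":
    # Hypothetical readings: the dip to 50 is noise, not the end of a stroke.
    samples = [10, 80, 85, 50, 78, 20, 90, 15]
    print(count_strokes(samples, high=70, low=30))
```

A single threshold at 70 would count the noisy dip-and-recover as a third stroke; the gap between the two thresholds is what filters it out.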